Artificial intelligence is quickly gaining traction across professions by streamlining data processing and increasing overall workflow efficiency. As the technology continues to advance and move into fields of medicine, some people are asking the question, “Can AI really perform at the same level as—or better than—doctors?” That was the case in one recent study conducted by New York Eye and Ear Infirmary of Mount Sinai NYEE and published in JAMA Ophthalmology, which found that AI was able to match or outperform human specialists in the management of glaucoma and retinal disease.

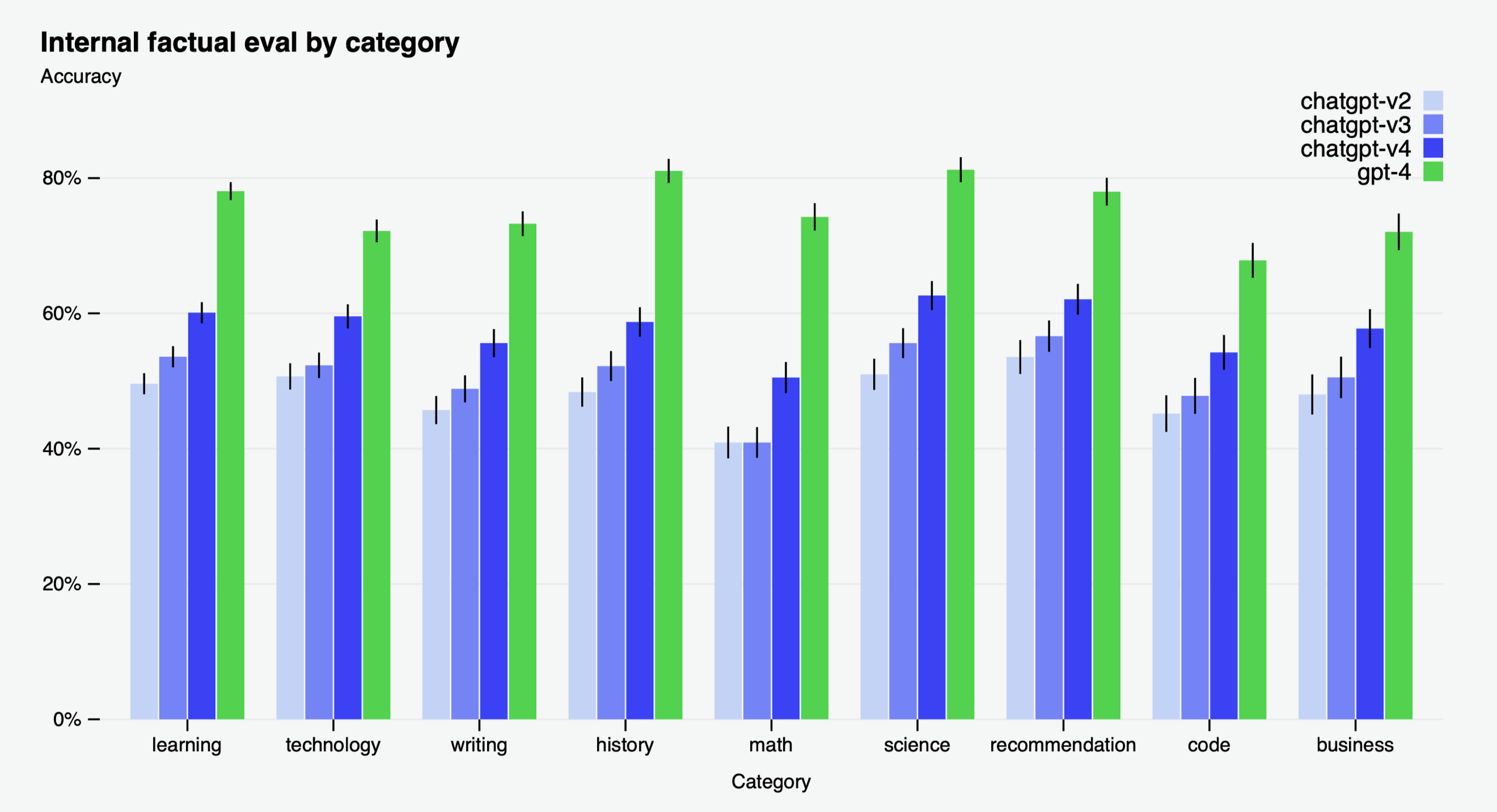

The comparative cross-sectional study presented ophthalmological and real patient case management questions to a large language model (LLM) chatbot (GPT-4, OpenAI) pertaining to the diagnosis and treatment of glaucoma and retinal disease (10 questions about each condition). The responses were then compared with those of fellowship-trained glaucoma and retina specialists, including 12 attending physicians and three senior trainees, and assessed for diagnostic accuracy and comprehensiveness based on a Likert scale.

|

| AI technology likely has a future role in the diagnosis and management of glaucoma and retinal disease, based on this study’s findings that it performs with equal or better diagnostic accuracy than human specialists. The latest generation, ChatGPT-4, notched substantial performance gains over previous iterations. Photo: OpenAI. Click image to enlarge. |

The study authors reported the following results from their statistical analysis of the data:

- The combined question-case mean rank for accuracy was 506.2 for the LLM chatbot and 403.4 for glaucoma specialists, and the mean rank for completeness was 528.3 and 398.7, respectively.

- The mean rank for accuracy was 235.3 for the LLM chatbot and 216.1 for retina specialists, and the mean rank for completeness was 258.3 and 208.7, respectively.

- The Dunn test revealed a significant difference between all pairwise comparisons, except specialist vs trainee in rating chatbot completeness.

- The overall pairwise comparisons showed that both trainees and specialists rated the chatbot’s accuracy and completeness more favorably than those of their specialist counterparts, with specialists noting a significant improvement in the chatbot’s accuracy.

- “This study accentuates the comparative proficiency of LLM chatbots in diagnostic accuracy and completeness compared with fellowship-trained ophthalmologists in various clinical scenarios,” the authors wrote. “The LLM chatbot outperformed glaucoma specialists and matched retina specialists in diagnostic and treatment accuracy, substantiating its role as a promising diagnostic adjunct in ophthalmology.”

In a press release from Mount Sinai, their team explains the significance of this provocative study, such as the potential role of advanced AI tools in offering eyecare providers support in the diagnosis and management patients with glaucoma and retina disorders.

“AI was particularly surprising in its proficiency in handling both glaucoma and retina patient cases, matching the accuracy and completeness of diagnoses and treatment suggestions made by human doctors in a clinical note format,” commented senior study author Louis R. Pasquale, MD, deputy chair for Ophthalmology Research for the Department of Ophthalmology. “Just as the AI application Grammarly can teach us how to be better writers, GPT-4 can give us valuable guidance on how to be better clinicians, especially in terms of how we document findings of patient exams,” he says.

Lead author of the study, Andy Huang, MD, an ophthalmology resident at NYEE, noted in the press release that while additional testing will be needed before this technology is implemented into practice, “The performance of GPT-4 in our study was quite eye opening.” He says that their team “recognized the enormous potential of this AI system from the moment we started testing it and were fascinated to observe that GPT-4 could not only assist but in some cases match or exceed, the expertise of seasoned ophthalmic specialists.”

Dr. Huang concludes by stating that “For patients, the integration of AI into mainstream ophthalmic practice could result in quicker access to expert advice, coupled with more informed decision-making to guide their treatment.”

Huang AS, Hirabayashi K, Barna L, Parikh D, Pasquale LR. assessment of a large language model’s responses to questions and cases about glaucoma and retina management. JAMA Ophthalmol. February 22, 2024. [Epub ahead of print]. |