It’s no surprise that, today, patients are likely to know a good deal about the conditions affecting them, given the instant knowledge available at our fingertips. Despite the internet providing a plethora of reputable information, patients may not know where to look for trusted sources on medicine and health practices across specialties, leaving them vulnerable to accessing misinformation.

With the emergence of AI chatbots, this problem may be on the verge of improvement, as such services could in theory improve accuracy by weeding out spurious reports. A recent study suggests ChatGPT does not yet resolve the issue, but in the future, a continually learning and improving bot may prove more suitable for adjunctive patient education than aimlessly browsing search engines.

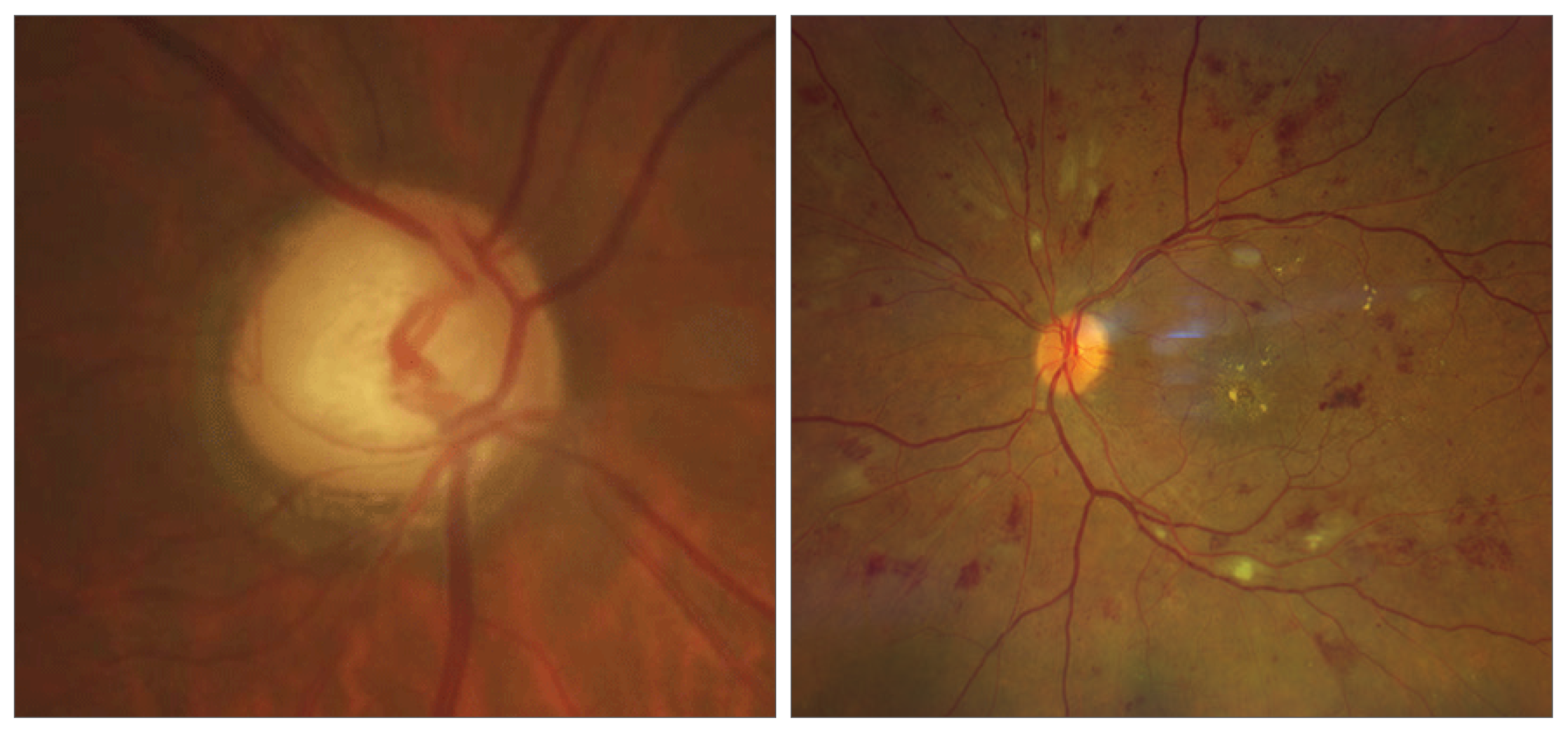


Common and well-known pathologies like glaucoma or diabetic retinopathy were more likely to display accurate and complete information. Photo: Justin Cole, OD, and Jarett Mazzarella, OD; Rami Aboumourad, OD.

To assess the accuracy of ophthalmic information provided by ChatGPT, researchers from Wills Eye Hospital in Philadelphia selected five diseases from each of eight ophthalmologic subspecialties. For each disease, three questions were asked:

- What is [x]?

- How is [x] diagnosed?

- How is [x] treated?

Responses were scored with a range from -3 (unvalidated and potentially harmful to a patient’s health or well-being if they pursue said suggestion) to 2 (correct and complete). To make these assessments, information was graded against the American Academy of Ophthalmology’s (AAO) guidelines for each disease.

A total of 120 questions were asked. Among the generated responses, 77.5% achieved a score of ≥1, while 61.7% were considered both correct and complete according to AAO guidelines. A significant 22.5% of replies scored ≤-1. Among those, 7.5% obtained a score of -3. ChatGPT was best at answering the first question and worst on the topic of treatment. Overall median scores across all subspecialties were 2 for “What is [x]?,” 1.5 for “How is [x] diagnosed?” and 1 for “How is [x] treated?”
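For readers who want to check the arithmetic, the figures above can be reproduced with a short sketch. It assumes that five diseases were drawn from each of the eight subspecialties and that the reported percentages are shares of all responses; the variable names and group labels are illustrative and do not come from the study.

```python
# Study design as described in the article (assumed: 5 diseases per subspecialty).
SUBSPECIALTIES = 8
DISEASES_PER_SUBSPECIALTY = 5
QUESTIONS_PER_DISEASE = 3

total_questions = SUBSPECIALTIES * DISEASES_PER_SUBSPECIALTY * QUESTIONS_PER_DISEASE

# Reported shares, assumed here to be percentages of all responses.
# The labels are illustrative shorthand, not the study's terminology.
reported_shares = {
    "higher-scoring responses": 77.5,
    "correct and complete": 61.7,
    "scored -1 or lower": 22.5,
}


def approx_count(percent, total):
    """Convert a reported percentage into the nearest whole number of responses."""
    return round(percent / 100 * total)


counts = {
    label: approx_count(percent, total_questions)
    for label, percent in reported_shares.items()
}

if __name__ == "__main__":
    print(f"Total questions: {total_questions}")
    for label, n in counts.items():
        print(f"{label}: about {n} of {total_questions}")
```

Under these assumptions, the 77.5% and 22.5% groups together account for every response, which is consistent with no reply having received a score of zero.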

Results were published in the journal Eye. The study authors offered an explanation for why median scores were highest for the definition question and lowest for the treatment question, surmising that it has to do with the dataset ChatGPT drew from during training.

As the authors explained in their paper, “The definition of a common disease is usually standard and well-known, and thus the information the chatbot has received in its training regarding the definition of a disease should be very straightforward. When prompted about diagnosis and treatment, it is more likely that the inputs contained conflicting information.”

The same hypothesis could be applied to the differences in median score across subspecialties. ChatGPT answered all the general subspecialty questions correctly, potentially because conditions in this category are better-known pathologies. As such, the chatbot may have had a larger and more consistent body of information to learn from. Supporting this idea, maximum scores were obtained within other subspecialties for well-known and common pathologies, including cataracts, glaucoma and diabetic retinopathy.

Of course, this research demonstrates that chatbots are nowhere near capable of robust use for disseminating medical information. However, the authors believe “it appears that artificial intelligence may be a valuable adjunct to patient education, but it is not sufficient without concomitant human medical supervision.”

Moving forward, they convey that “as the use of chatbots increases, human medical supervision of the reliability and accuracy of the information they provide will be essential to ensure patient’s proper understanding of their disease and prevent any potential harm to the patient’s health or well-being.”

Cappellani F, Card KR, Shields CL, Pulido JS, Haller JA. Reliability and accuracy of artificial intelligence ChatGPT in providing information on ophthalmic diseases and management to patients. Eye. January 20, 2024. [Epub ahead of print].